There’s an AI for That…Kind of!

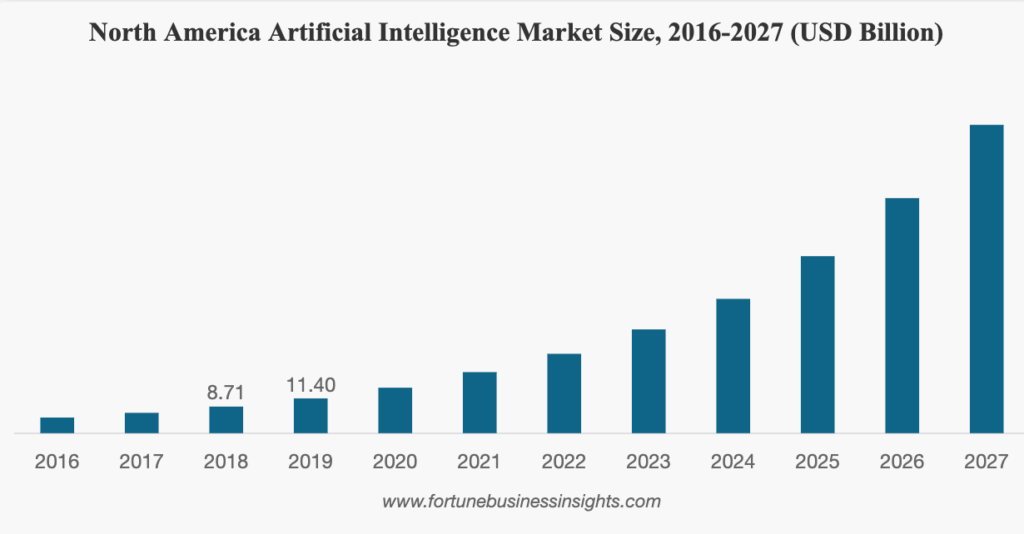

We keep hearing about AI everywhere we turn, and it always comes with hard-hitting stats like “The global artificial intelligence market is expected to reach $267 Billion by 2027” (Fortune Business Insights, 2020). Or this one: “The number of businesses adopting artificial intelligence grew by 270% in four years” (Gartner, 2019). This all sounds amazing, but what does it really mean? Well, these two quotes actually refer to two completely different things: AI companies versus companies that use AI. And there is a difference.

The global artificial intelligence market consists of a few different components. There are the tools and platforms that are used for AI, services oriented towards building AI applications, and of course the advancement of Cloud AI. This is what drives the digital economy, and seeing as how you landed on this blog post, you should rightly be expecting your Instagram ads to start featuring Microsoft Azure, Amazon Web Services, Google Cloud Platform, and IBM Cloud. All of these public clouds offer their own flavour and set of AI services that let you use pre-trained models for specific use cases such as speech-to-text, text-to-speech, computer vision, or virtual assistants. AI is where these tech behemoths are placing their bets, bolstering their investments by releasing vast amounts of material to drive and support AI adoption (e.g. The AI Ladder by IBM).

Companies like DataRobot have also emerged, aiming to make AI available to non-data scientists by providing automated machine learning (AutoML) in a friendly interface. These big cloud platforms, the corresponding mountains of free strategic content, and innovative companies like DataRobot make up the “global artificial intelligence market”.

This market sets the stage for a new opportunity, where thousands of start-ups seek to apply AI and ML in new and exciting ways to serve some niche in the commercial marketplace. However, those organizations face an inherently uphill battle.

One of the most complete articles I have ever read on the topic of AI businesses is “The New Business of AI (and How it’s Different From Traditional Software)” by Martin Casado and Matt Bornstein. They seek to explain exactly why we hear about so many AI start-ups but so few of them rise to prominence or are able to deliver on the promise of the revolutions featured in their VC funding presentations.

They point to three main issues that are inherent to building an AI company:

Low gross margins are the new norm. It takes heavy cloud infrastructure usage to crunch through terabytes of data to train AI models, and then even more computing power to continuously process the terabytes of data that customers push through the models once they are operational. That is a lot of storage, and even more compute-related cost. This is a bit of a self-fulfilling prophecy, since many AI use cases are only possible due to the proliferation, continued growth and ‘economies of scale’ associated with public cloud offerings. Many AI use cases also rely on keeping humans in the loop to ensure the accuracy and effectiveness of the ML pipeline. This is especially true in cases that carry risk, such as self-driving cars that have remote control operators, social media moderators who oversee the AI algorithms, and medical devices that still rely on doctors to make the final call.
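To make the margin argument concrete, here is a minimal sketch of the arithmetic. Every figure in it is hypothetical, chosen only to show how extra compute and human-review line items pull gross margin down; none of the numbers come from the Casado and Bornstein article.

```python
# All figures are hypothetical and exist only to illustrate the arithmetic.

def gross_margin(revenue: float, cost_of_revenue: float) -> float:
    """Return gross margin as a fraction of revenue."""
    return (revenue - cost_of_revenue) / revenue


revenue = 1_000_000.0  # assumed annual revenue for both businesses

# Assumed cost of revenue for a traditional software business.
saas_costs = {
    "hosting": 100_000.0,
    "support": 100_000.0,
}

# The same business with the AI-specific costs described above added on.
ai_costs = {
    "hosting": 100_000.0,
    "support": 100_000.0,
    "training_compute": 100_000.0,
    "inference_compute": 100_000.0,
    "human_in_the_loop": 100_000.0,
}

saas_margin = gross_margin(revenue, sum(saas_costs.values()))
ai_margin = gross_margin(revenue, sum(ai_costs.values()))

print(f"Traditional software gross margin: {saas_margin:.0%}")
print(f"AI business gross margin: {ai_margin:.0%}")
```

With these assumed inputs, the only difference between the two businesses is the three AI-specific cost lines, which is the effect the paragraph above describes.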

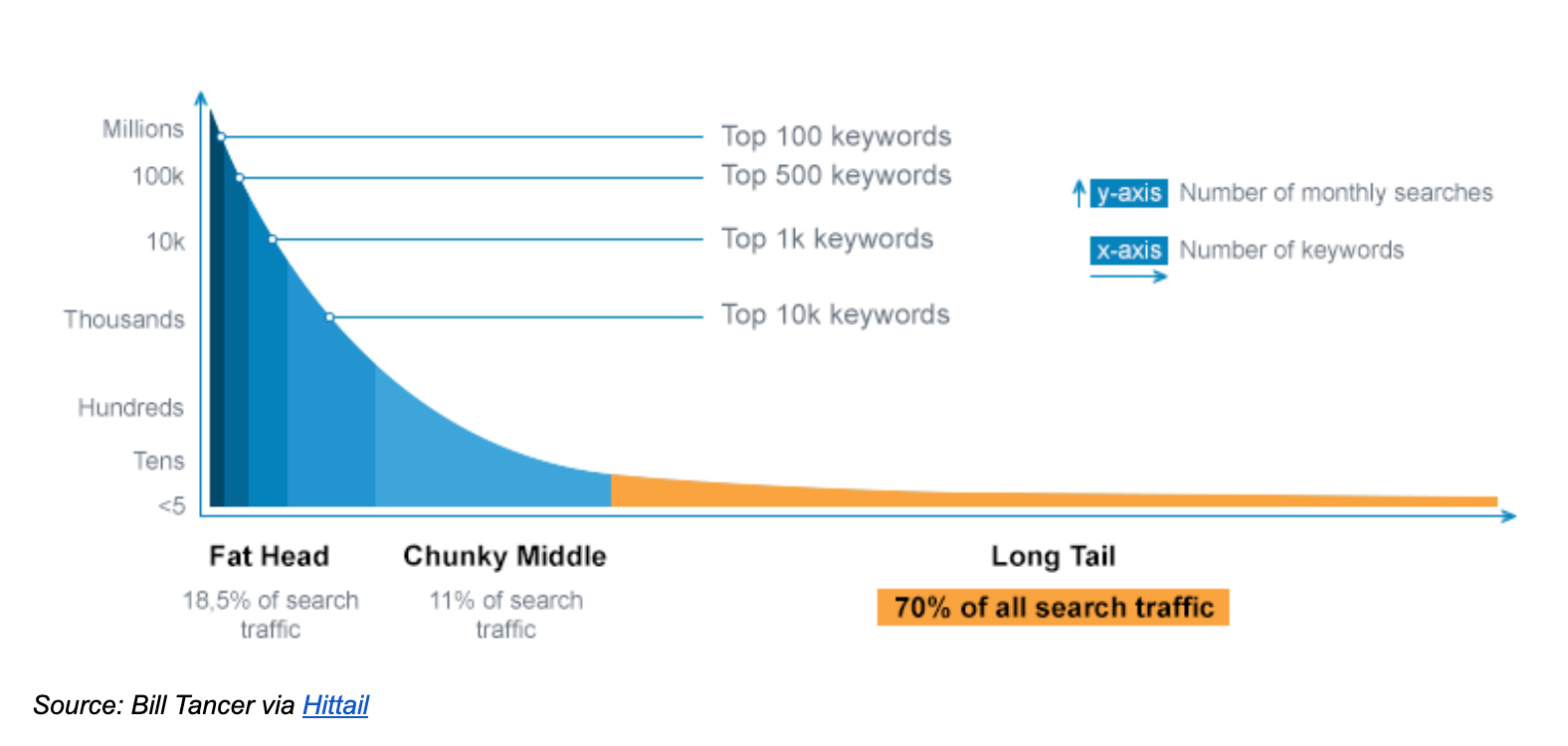

Another plague of the AI business is the never-ending long tail of edge cases. A lot of AI applications must work with very noisy data, or even unstructured data such as text, images, or voice recordings. Most of us can be forgiven for expecting human or even superhuman capabilities from AI in these cases; after all, these things seem so easy to us as humans. But many of these use cases are hard to pin down, even logically, which is why AI applications that land in the long tail of edge cases tend to balloon and creep in both time and scope.

The final issue inherent to the AI business is right in the definition of machine learning: “Machine learning is a way to use standard algorithms to derive predictive insights from data and make repeated decisions”. The keyword there is “standard”. Because so much of machine learning and AI is built on open-source technology and standard algorithms, there’s a relatively small set of libraries that almost everyone uses. There are the basics like sklearn, keras and tensorflow (the de facto standards), and a relatively limited number of parameters to tweak in most algorithms, unless you have an advanced degree in mathematics or machine learning itself. This leads to very weak defensive moats for AI companies: if the algorithms are “standard”, then the models you can train with them are really only as good as the data upon which they were built.

Further to that, many AI companies attempt to offer their product as Software-as-a-Service (SaaS). This is great for recurring revenue but adds a layer of complexity for customers who might be bringing their own data. The result is inconsistency, often meaning wild variations in outcomes and experiences between Customer A and Customer B.
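The point about standard algorithms and customer-supplied data can be illustrated with a small, self-contained sketch. It assumes a deliberately simple stand-in for a "standard algorithm" (an ordinary least-squares line fit written with the Python standard library) and two hypothetical customers whose synthetic data differ only in noise level. The names `fit_line`, `make_customer_data`, and the noise values are illustrative assumptions, not anything taken from a real product.

```python
import random


def fit_line(xs, ys):
    """Ordinary least squares for y = a*x + b, using the closed-form solution."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    a = sxy / sxx
    b = mean_y - a * mean_x
    return a, b


def mean_abs_error(model, xs, ys):
    """Average absolute prediction error of a fitted (a, b) model."""
    a, b = model
    return sum(abs(a * x + b - y) for x, y in zip(xs, ys)) / len(xs)


def make_customer_data(rng, n, noise):
    """Synthetic data: the true relationship is y = 2x + 1 plus Gaussian noise."""
    xs = [rng.uniform(0, 10) for _ in range(n)]
    ys = [2 * x + 1 + rng.gauss(0, noise) for x in xs]
    return xs, ys


rng = random.Random(42)  # fixed seed so the run is repeatable

# Hypothetical Customer A brings clean data; Customer B brings noisy data.
train_a = make_customer_data(rng, 200, noise=0.5)
test_a = make_customer_data(rng, 200, noise=0.5)
train_b = make_customer_data(rng, 200, noise=5.0)
test_b = make_customer_data(rng, 200, noise=5.0)

# The exact same algorithm is trained once per customer.
model_a = fit_line(*train_a)
model_b = fit_line(*train_b)

# Each model is evaluated on held-out data from its own customer.
error_a = mean_abs_error(model_a, *test_a)
error_b = mean_abs_error(model_b, *test_b)

print(f"Customer A mean absolute error: {error_a:.2f}")
print(f"Customer B mean absolute error: {error_b:.2f}")
```

The algorithm is identical in both runs, so any gap between the two error figures comes from the data each customer supplied, not from the vendor's code.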

Unless AI start-ups can tackle these issues in the near term, the lion’s share of the growth in the global AI market is likely to be captured by the big cloud vendors and AutoML platforms. The services offered by cloud vendors and ML/AutoML platform vendors will, in turn, feed the second statistic in our introduction: the number of businesses adopting AI as an internal capability, rather than turning to SaaS AI offerings.

For those looking to develop AI capabilities in-house by leveraging the growing AI market, here are a few things to consider. We must accept that AI will require significant cloud investment to train our models and deploy them. Organizations that do not rely on serving AI to their customers are at an advantage: they can create models that are very specific to their own data and yield better results for themselves. In this “internal” scenario, we are not worried about whether the model will work on anyone else’s data. We just care about our data.

However, we should carefully weigh the advantages and limitations of our AI applications, especially in scenarios where we want to replace human effort. More often, you will be able to replace only part of a process with AI, allowing it to work alongside your employees. Think of this as Augmented Intelligence. Lastly, the industry-standard models are a great boon for in-house work because we can start developing a use case very quickly. Don’t run out and hire an ML Engineer right out of the gate; instead, start with a team of Data Scientists and prove that your use case holds water before investing in a year-long development cycle. AI is a science, and science is about experimentation.

The global AI marketplace is growing; that’s a fact. But the areas enjoying the most growth are the Cloud AI and Assisted Modeling solutions that focus on scaling applications of AI. This presents an opportunity for all of us to start dipping our toes into the world of AI with surprisingly low barriers to entry.

WRITTEN BY:

STEVE REVENKO, TECHNICAL ACCOUNT MANAGER
